Recovering an entire OSD node

A Ceph Recovery Story

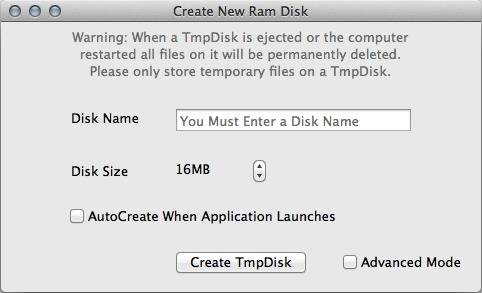

I wanted to share with everyone a situation that happened to me over the weekend. This is a tale of a disaster, sheer panic, and recovery from said disaster. I know some may just want a quick answer to how “X” happened to me and how I did “Y” to solve it. But I truly believe that if you want to be a better technician and all-around admin, you need to know the methodology of how the solution was found. Let's get into what happened, why it happened, how I fixed it, and how to prevent this from happening again.

What happened

It started with the simple need to upgrade a few packages on each of my cluster nodes (not Ceph related). Standard operating procedures dictate that this needs to be done during a low-traffic part of the week. My cluster setup is small, consisting of 4 OSD nodes, an MDS and 3 monitors. Only wanting to upgrade standard packages on the machine, I decided to start with my first OSD node (let's just call it node1). I upgraded the packages fine, set the noout flag on the cluster with ceph osd set noout, and proceeded to reboot.

…Unbeknownst to me, the No.2 train from Crap city was about to pull into Pants station.

Reboot finishes, CentOS starts to load up, and then I go to try and log in. Nothing. Maybe I typed the password wrong. Nothing. Caps lock? Nope. Long story short, changing my password in single user mode hosed my entire OS. That's fine though, I can rebuild this node and re-add it. Let's mark these disks out one at a time, let the cluster heal, and add in a new node (after reinstalling the OS).

After removing most of the disks, I notice I have 3 PGs stuck in a stale+active+clean state. What?!

The pool in this case is pool 11 (pg 11.48 – the 11 here tells you which pool it's from). Checking on the status of pool 11, I find it is inaccessible and it has caused an outage.
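If you want to pull the pool number out of a PG ID in a script, here is a minimal sketch. The function name `parse_pgid` is my own for illustration, not something Ceph ships. The part after the dot is the PG's number within the pool, written in hexadecimal.

```python
from typing import Tuple


def parse_pgid(pgid: str) -> Tuple[int, int]:
    """Split a PG ID such as '11.48' into (pool_id, pg_number).

    The pool ID is decimal; the part after the dot is hexadecimal.
    """
    pool_part, pg_part = pgid.strip().split(".", 1)
    return int(pool_part), int(pg_part, 16)


if __name__ == "__main__":
    pool_id, pg_number = parse_pgid("11.48")
    print(f"pool {pool_id}, pg number {pg_number}")
```

Grouping stuck PGs by the first element of that tuple is a quick way to see which pools are affected.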

…and the train has arrived.

But why did this happen?

Panic sets in a bit, and I mentally start going through the 5 “whys” of troubleshooting.

- Problem – My entire node is dead, I lost access to its disks, and one of my pools is completely unavailable

- Why? An upgrade hosed the OS

- Why? Pool outage possibly was related to the OSD disks

- Why? Pool outage was PG related

What did all 3 of those PGs have in common? Their OSDs all resided on the same node. But how? I have all my pools in CRUSH set to replicate between hosts.

Note: You can see the OSDs that make up a PG at the end of its detail status, for example ‘last acting [73,64,60]’.

Holy crap, the pool never got set to the “hosts” CRUSH rule (rule 1).
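This is the kind of thing that can be checked ahead of time. Below is a rough sketch, assuming you have already collected each PG's acting set and an OSD-to-host map yourself. The function names, the host names, and the second sample PG are made up for illustration; only the acting set [73,64,60] comes from my cluster, and pairing it with 11.48 here is just for the example.

```python
from typing import Dict, Iterable, List, Set


def hosts_for_pg(acting: Iterable[int], osd_to_host: Dict[int, str]) -> Set[str]:
    """Return the set of hosts that hold the OSDs in a PG's acting set."""
    return {osd_to_host[osd] for osd in acting}


def pgs_on_single_host(
    pg_acting: Dict[str, List[int]], osd_to_host: Dict[int, str]
) -> List[str]:
    """List PGs whose replicas all live on one host (no host-level redundancy)."""
    return sorted(
        pgid
        for pgid, acting in pg_acting.items()
        if len(hosts_for_pg(acting, osd_to_host)) == 1
    )


if __name__ == "__main__":
    # Hypothetical layout: OSDs 60, 64 and 73 all sit on node1.
    osd_to_host = {60: "node1", 64: "node1", 73: "node1", 12: "node2", 25: "node3"}
    pg_acting = {
        "11.48": [73, 64, 60],  # every replica on node1
        "11.10": [60, 12, 25],  # spread across three hosts
    }
    print(pgs_on_single_host(pg_acting, osd_to_host))
```

Any PG this reports will go stale the moment that one host dies, which is exactly what bit me.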

How do we fix this

Let's update our whys.

- Problem – My entire node is dead, I lost access to its disks, and one of my pools is completely unavailable

- Why? An upgrade hosed the OS

- Why? Pool outage possibly was related to the OSD disks

- Why? Pool outage was PG related

- Why? The pool failed because 3 of its PGs resided solely on the failed node. These disks were also marked out and removed from the cluster.

There you have it. We need to get the data back from these disks. Now, a quick Google search for how to fix these PGs will quickly lead you to the most common answer: delete the pool and start over. Well, that's great for people who don’t have anything in their pool. Looks like I’m on my own here.

First thing I want to do is get this node installed back with an OS on it. Once that's complete, I want to install Ceph and deploy some admin keys to it. Now the tricky part: how do I get these disks added back into the cluster while retaining all their data and OSD IDs?

Well, we need to map the affected OSDs back to their respective directories. Since this OS is fresh, let's recreate those directories. I need to recover data from OSDs 60, 64, 68, 70, 73, 75 and 77, so we’ll make only these.

Thankfully I knew where each /dev/sd$ device gets mapped. If you don’t remember, that's OK: each disk has a unique ID that can be found on the actual disk itself. Using that, we can cross-reference it with our actual cluster info!

Boom, there we go, this disk belonged to osd.60. Let's mount it to the appropriate Ceph directory, /var/lib/ceph/osd/ceph-60.
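The cross-reference itself is just a lookup. Here is a small sketch, assuming you have read the unique ID off the disk and built a table of ID to OSD number from your cluster info by hand. The helper name `mount_point_for`, the table, and the sample IDs are all made up for illustration; the directory layout is the one used above.

```python
from typing import Dict, Optional

OSD_DATA_ROOT = "/var/lib/ceph/osd"


def mount_point_for(
    disk_fsid: str, fsid_to_osd: Dict[str, int], cluster: str = "ceph"
) -> Optional[str]:
    """Return the data directory for the OSD that owns this disk, or None.

    fsid_to_osd maps lowercase unique IDs to OSD numbers.
    """
    osd_id = fsid_to_osd.get(disk_fsid.strip().lower())
    if osd_id is None:
        return None
    return f"{OSD_DATA_ROOT}/{cluster}-{osd_id}"


if __name__ == "__main__":
    # Hypothetical IDs, standing in for what you find on your own disks.
    fsid_to_osd = {
        "11111111-2222-3333-4444-555555555555": 60,
        "aaaaaaaa-bbbb-cccc-dddd-eeeeeeeeeeee": 64,
    }
    print(mount_point_for("11111111-2222-3333-4444-555555555555\n", fsid_to_osd))
```

A `None` result means the ID is not in your table, which is the case covered next.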

OK, so maybe you did what I did and REMOVED the OSD entirely from the cluster. How do you find out its OSD number then? It's easier than you think. All you do is take that FSID and use it to create a new OSD. Once complete, the OSD is created with its original OSD ID, and that ID is returned!

OK, so now what? Well, let's start one of our disks and see what happens (with debug set to 10, just in case).

Well it failed with this error

Well googling this resulted in nothing. So run it through the 5 whys and see if we can’t figure it out.

- Problem – OSD failed to obtain rotating keys. This sounds like a heartbeat/auth problem.

- Why? Other nodes may have issues seeing this one on the network. Nope, this checks out fine.

- Why? Heartbeat could be messed up, time off?

- Why? Time was off by 1 whole hour. Fixing the clock solved the issue.
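That last check is easy to script. A minimal sketch, assuming you can grab a timestamp from the rebuilt node and one from a node you trust; the function names and the 5-second default tolerance are my own arbitrary choices, not Ceph settings.

```python
from datetime import datetime, timedelta


def clock_skew(local: datetime, reference: datetime) -> timedelta:
    """Absolute difference between two clocks."""
    return abs(local - reference)


def skew_is_acceptable(
    local: datetime,
    reference: datetime,
    tolerance: timedelta = timedelta(seconds=5),  # arbitrary illustrative limit
) -> bool:
    """True if the two clocks agree to within the tolerance."""
    return clock_skew(local, reference) <= tolerance


if __name__ == "__main__":
    reference = datetime(2017, 1, 1, 12, 0, 0)
    rebuilt_node = reference - timedelta(hours=1)  # the situation I was in
    print(clock_skew(rebuilt_node, reference))
    print(skew_is_acceptable(rebuilt_node, reference))
```

Running something like this right after a reinstall, before starting any OSD, would have saved me a round of head scratching.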

Yay! Having the wrong time set will make your keys (or tickets) look like they have expired during this handshake. Trying to start this OSD again resulted in a journal error. Not a big deal, let's just recreate the journal.

Lets try one more time…

Success! How do I know? Because systemctl status ceph-osd@60 reports success and running ceph -s shows it as up and in.

Note: When adding these disks back to the crush map, set their weight to 0, so that nothing gets moved to them, but you can read what data you need off of them.

I repeated this with all the other needed OSDs, and after all the rebuilding and backfilling was done, I checked the pool again and saw all my images! Thank god. I changed the pool to replicate at the host level by switching it to the new CRUSH rule.

I waited for it to rebalance the pool, and my cluster status was healthy again! At this point I didn’t want to keep up just 8 OSDs on one node, so one by one I set them out and properly removed them from the cluster. I'll rejoin this node later, once my cluster status goes back to being healthy.

TL;DR

I had a PG that was entirely on a failed node. By reinstalling the OS again on that node, I was able to manually mount up the old OSDs and add them to the cluster. This allowed me to regain access to vital data pools and fix the crush rule set that caused this to begin with.

Thank you for taking the time to read this. I know it's lengthy, but I wanted to detail to the best of my ability everything I encountered and went through. If you have any questions or just wanna say thanks, please feel free to send me an email at magusnebula@gmail.com.

Source: Stephen McElroy (Recovering from a complete node failure)